Vocabulary-free Image Classification

Recent advances in large vision-language models have revolutionized the image classification paradigm. Despite showing impressive zero-shot capabilities, these models assume a pre-defined set of categories, a.k.a. the vocabulary, at test time for composing the textual prompts. However, such an assumption can be impractical when the semantic context is unknown and evolving. We thus formalize a novel task, termed Vocabulary-free Image Classification (VIC), where we aim to assign to an input image a class that resides in an unconstrained language-induced semantic space, without the prerequisite of a known vocabulary. VIC is a challenging task as the semantic space is extremely large, containing millions of concepts, with hard-to-discriminate fine-grained categories. In this work, we first empirically verify that representing this semantic space by means of an external vision-language database is the most effective way to obtain semantically relevant content for classifying the image. We then propose Category Search from External Databases (CaSED), a method that exploits a pre-trained vision-language model and an external vision-language database to address VIC in a training-free manner. CaSED first extracts a set of candidate categories from captions retrieved from the database based on their semantic similarity to the image, and then assigns to the image the best-matching candidate category according to the same vision-language model. Experiments on benchmark datasets validate that CaSED outperforms other complex vision-language frameworks, while being efficient with far fewer parameters, paving the way for future research in this direction.

1 University of Trento

2 Fondazione Bruno Kessler (FBK)

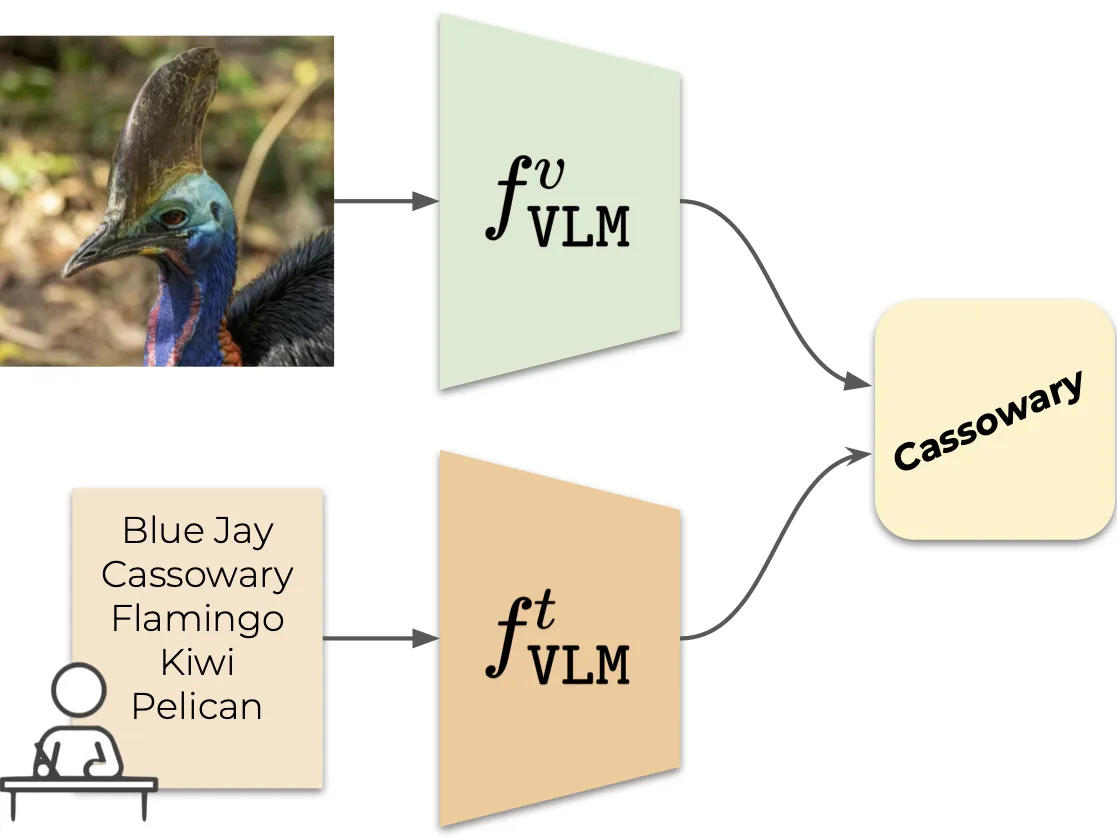

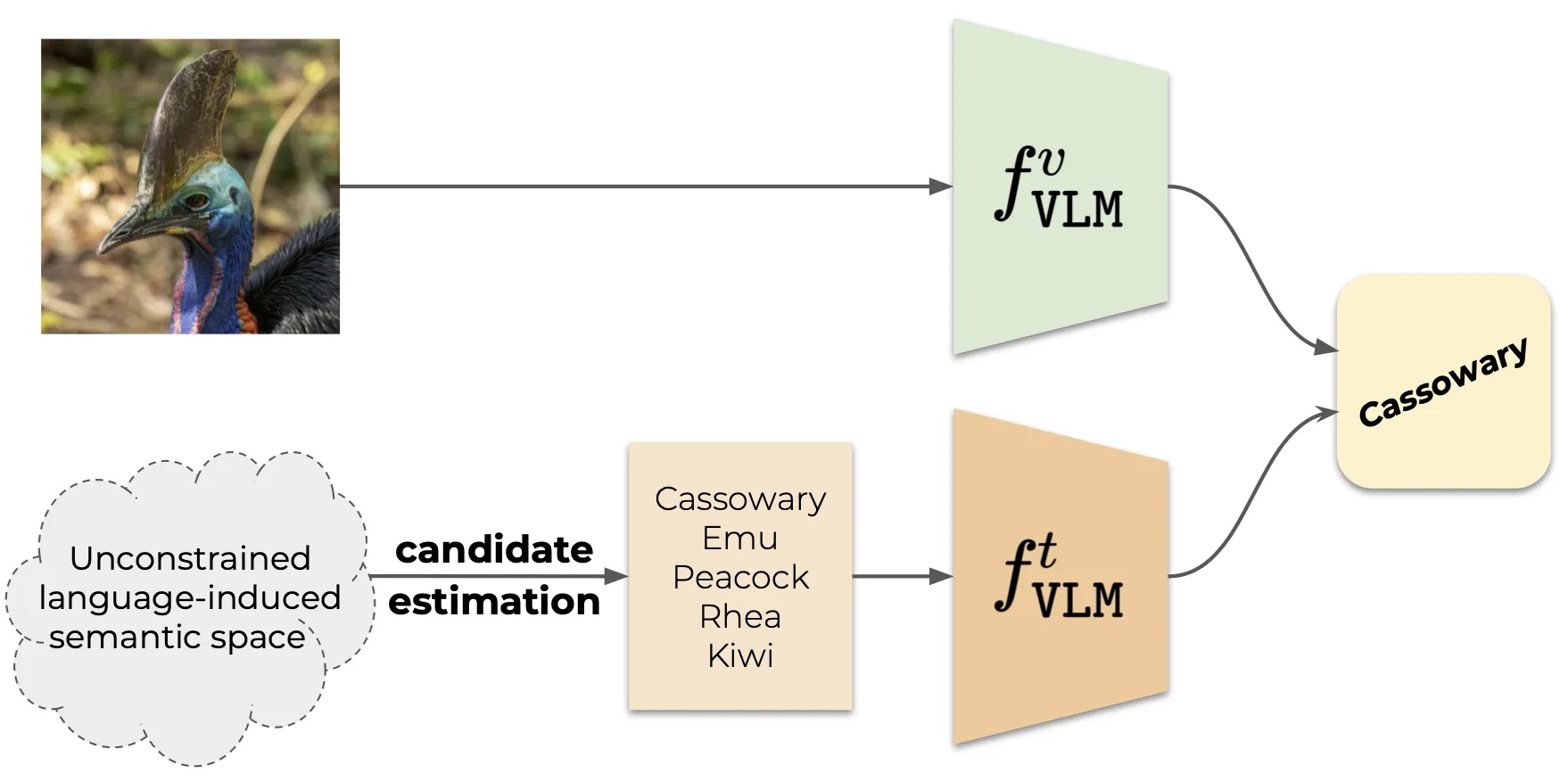

Task definition

Vocabulary-free Image Classification aims to assign a class \(c\) to an image \(x\) without prior knowledge of the vocabulary \(C\), thus operating on the semantic class space \(\mathcal{S}\) that contains all possible concepts. Formally, we want to produce a function \(f\) mapping an image to a semantic label in \(\mathcal{S}\), i.e. \(f: \mathcal{X}\rightarrow \mathcal{S}\), where \(\mathcal{X}\) denotes the image space. Our task definition implies that, at test time, the function \(f\) has access only to an input image \(x\) and a large source of semantic concepts that approximates \(\mathcal{S}\). VIC is a challenging classification task by definition due to the extremely large cardinality of \(\mathcal{S}\). As an example, the class set of ImageNet-21k [1], one of the largest classification benchmarks, is \(200\) times smaller than the set of semantic classes in BabelNet [2]. This large search space makes it particularly challenging to distinguish fine-grained concepts across multiple domains, as well as concepts that naturally follow a long-tailed distribution.
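To make the contrast with vocabulary-based zero-shot classification concrete, the two settings can be written side by side. This is only an illustrative formulation: the visual encoder \(\phi_v\), the text encoder \(\phi_t\), and the similarity \(\langle\cdot,\cdot\rangle\) are notational assumptions introduced here, not symbols defined in the text above.

\[
f_{\text{VLM}}(x) = \arg\max_{c \in C} \langle \phi_v(x), \phi_t(c) \rangle,
\qquad
f_{\text{VIC}}(x) \in \mathcal{S}, \quad |\mathcal{S}| \gg |C|.
\]

In the first case the search is restricted to the known vocabulary \(C\); in VIC no such set is given, so the prediction must be found within (an approximation of) the whole semantic space \(\mathcal{S}\).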

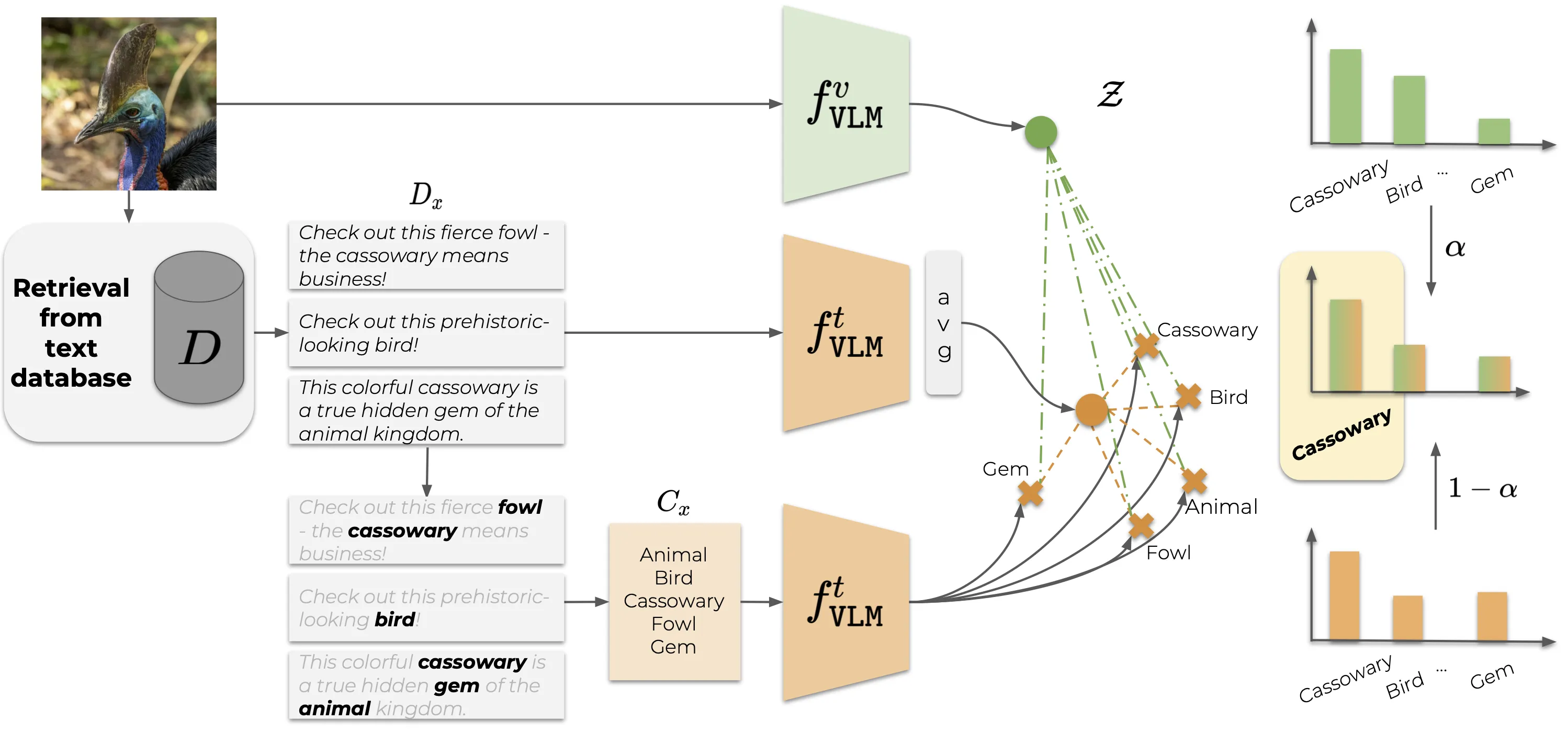

Method overview

Our proposed method, CaSED, finds the best-matching category within the unconstrained semantic space by leveraging multimodal data from large vision-language databases. Figure 3 provides an overview of the method. We first retrieve from a database the captions that are semantically most similar to the input image, and extract a set of candidate categories from them by applying text parsing and filtering techniques. We then score the candidates using the multimodal aligned representation of a large pre-trained VLM, i.e. CLIP [3], to obtain the best-matching category.
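The three steps above (retrieve captions, extract candidates, score candidates) can be sketched in a few lines. This is a toy illustration under strong assumptions, not the CaSED implementation: the bag-of-words `embed_text` function, the six-dimensional `AXES` space, the hand-written `image_vec`, and the stopword filter are all hypothetical stand-ins for CLIP's encoders, a real caption database, and the text parsing and filtering used in practice. The sketch also scores candidates with image-to-text similarity only.

```python
import math
import re
from collections import Counter

# Toy shared embedding space (an assumption, NOT CLIP): one axis per concept word.
AXES = ["dog", "grass", "cat", "sofa", "car", "street"]
# Minimal stand-in for the text filtering step.
STOPWORDS = {"a", "an", "the", "on", "in", "of", "is", "and", "with"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def embed_text(text):
    """Hypothetical text encoder: counts of each axis word in the text."""
    counts = Counter(tokenize(text))
    return [float(counts[word]) for word in AXES]


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def retrieve_captions(image_vec, captions, k):
    """Step 1: keep the k captions most similar to the image embedding."""
    ranked = sorted(
        captions,
        key=lambda caption: cosine(image_vec, embed_text(caption)),
        reverse=True,
    )
    return ranked[:k]


def extract_candidates(captions):
    """Step 2: parse captions into unique candidate words, dropping stopwords."""
    candidates = []
    for caption in captions:
        for word in tokenize(caption):
            if word not in STOPWORDS and word not in candidates:
                candidates.append(word)
    return candidates


def classify(image_vec, captions, k=2):
    """Step 3: score each candidate against the image and return the best one."""
    retrieved = retrieve_captions(image_vec, captions, k)
    candidates = extract_candidates(retrieved)
    scores = {c: cosine(image_vec, embed_text(c)) for c in candidates}
    best = max(scores, key=scores.get)
    return best, scores


# Tiny hypothetical caption database and a toy image embedding (mostly "dog").
DATABASE = [
    "a dog running on the grass",
    "a cat sleeping on the sofa",
    "a car parked in the street",
    "the dog and the cat",
]
image_vec = [0.9, 0.4, 0.1, 0.0, 0.0, 0.0]

label, scores = classify(image_vec, DATABASE, k=2)
print(label)
```

With this toy data, the two retrieved captions yield the candidates `dog`, `running`, `grass`, and `cat`, and the highest-scoring candidate is `dog`. Note that no class list is passed to `classify`: the candidate set is built per image from the retrieved captions, which is what distinguishes this setup from vocabulary-based zero-shot classification.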

Experiments

Datasets

We follow existing works [4, 5] and use ten datasets that feature both coarse-grained and fine-grained classification in different domains: Caltech-101 (C101) [6], DTD [7], EuroSAT (ESAT) [8], FGVC-Aircraft (Airc.) [9], Flowers-102 (Flwr) [10], Food-101 (Food) [11], Oxford Pets (Pets), Stanford Cars (Cars) [12], SUN397 (SUN) [13], and UCF101 (UCF) [14]. Additionally, we use ImageNet [1] for hyperparameter tuning.

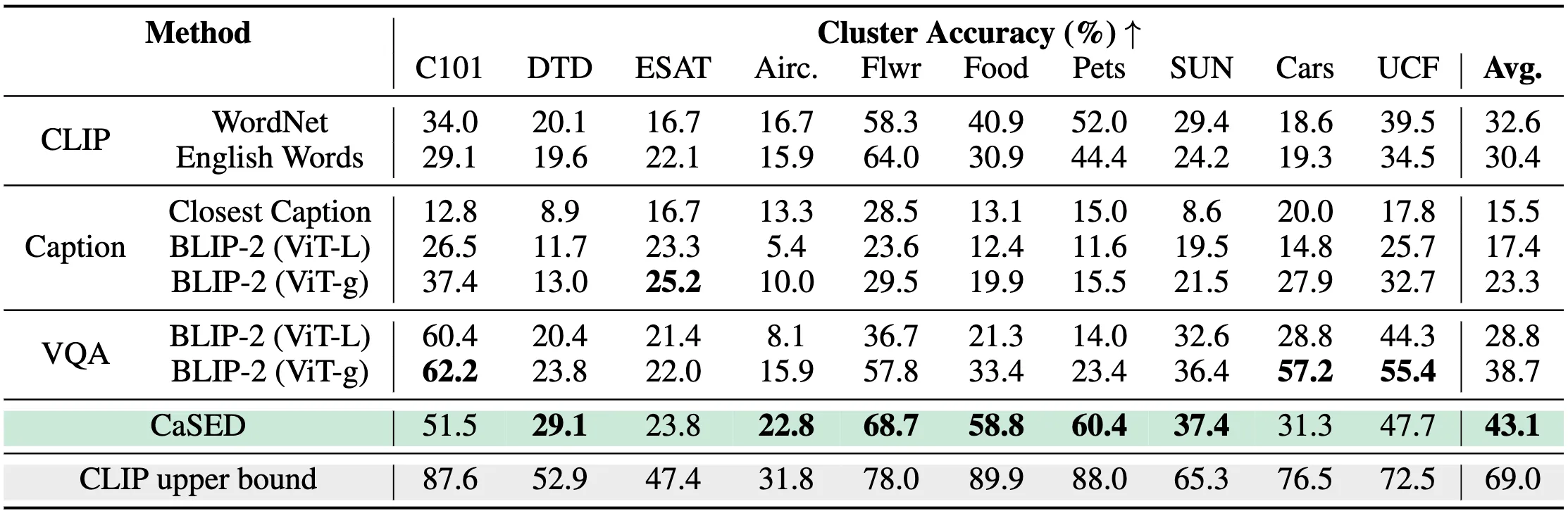

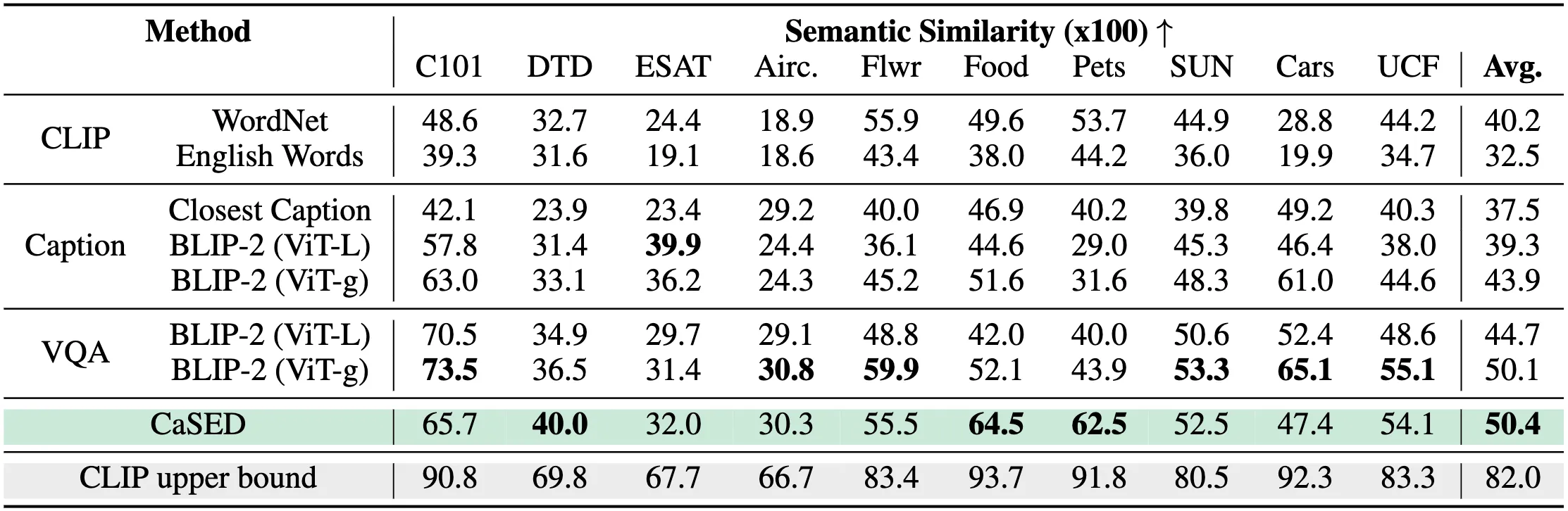

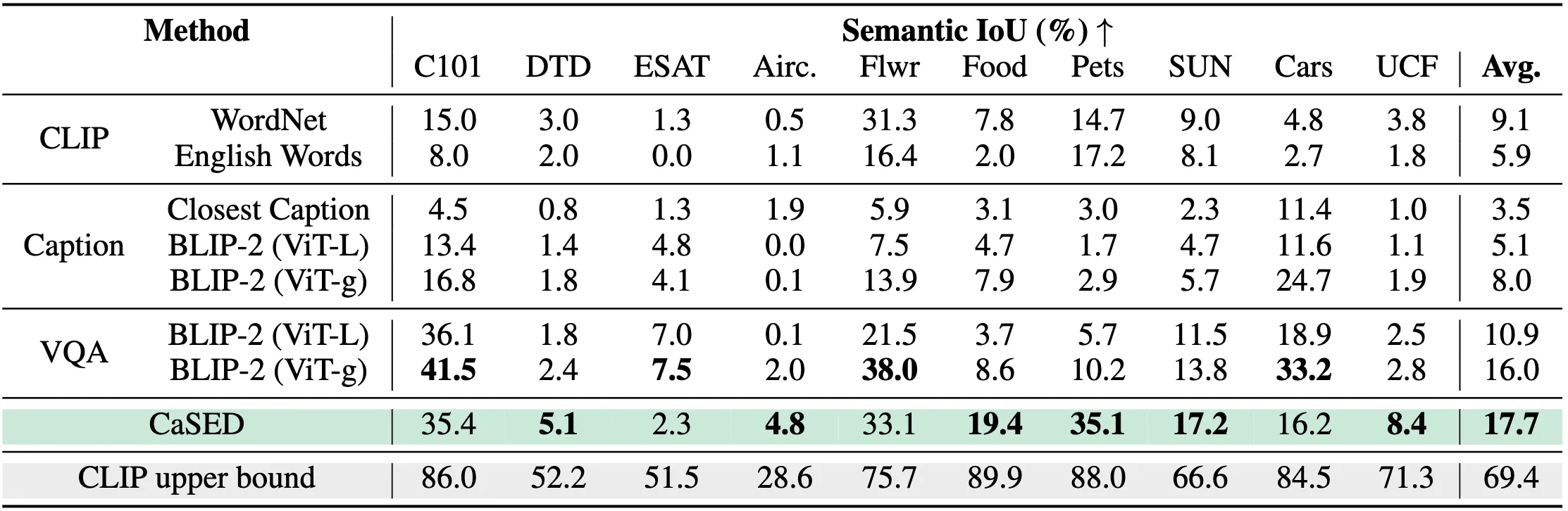

Quantitative results

We compare CaSED with other VLM-based methods on the novel task of Vocabulary-free Image Classification, using benchmark datasets that cover both coarse-grained and fine-grained classification.

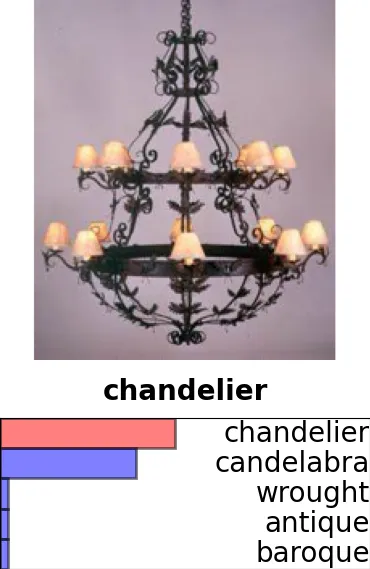

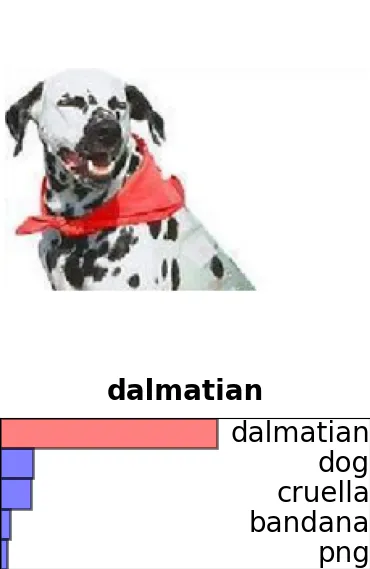

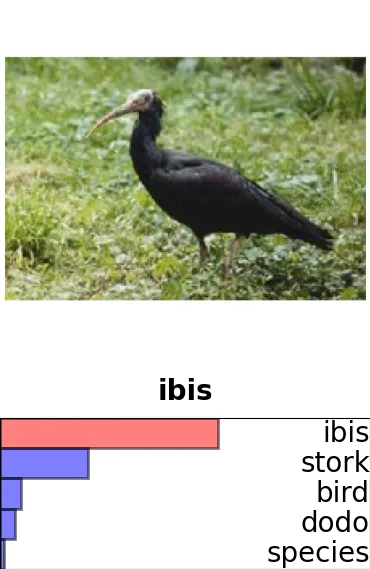

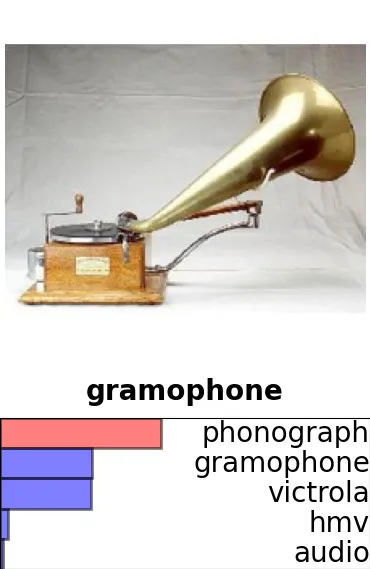

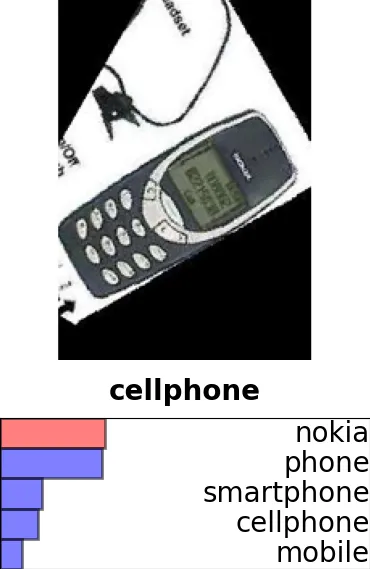

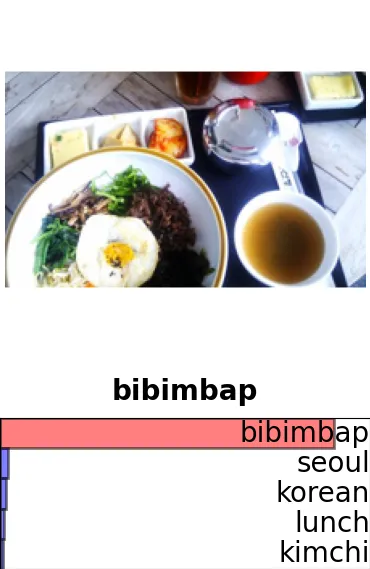

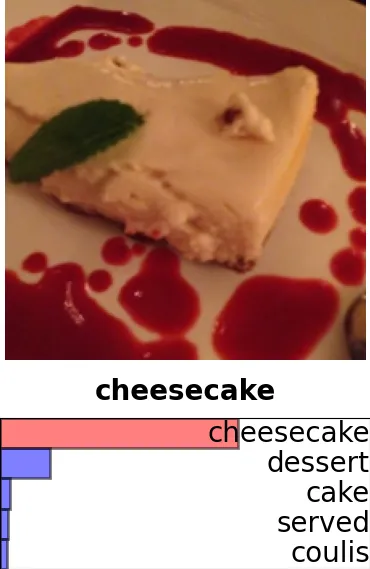

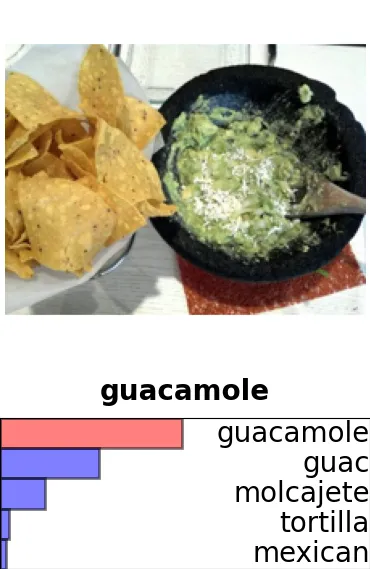

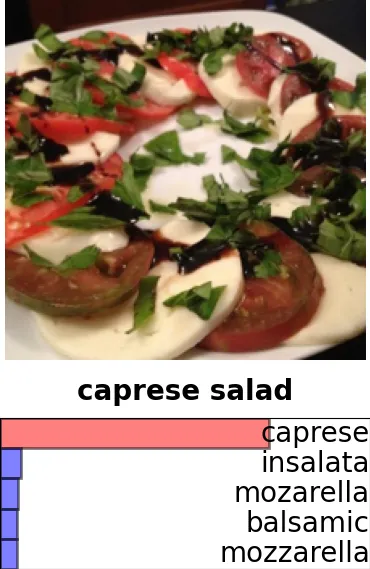

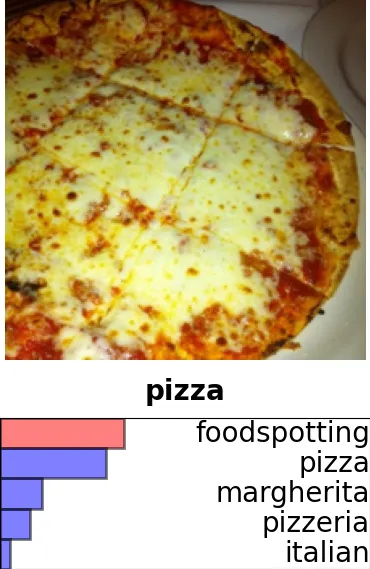

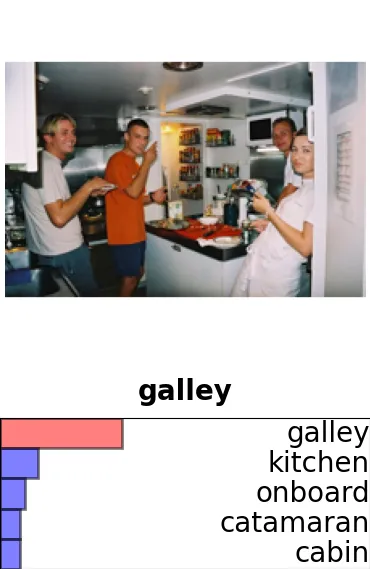

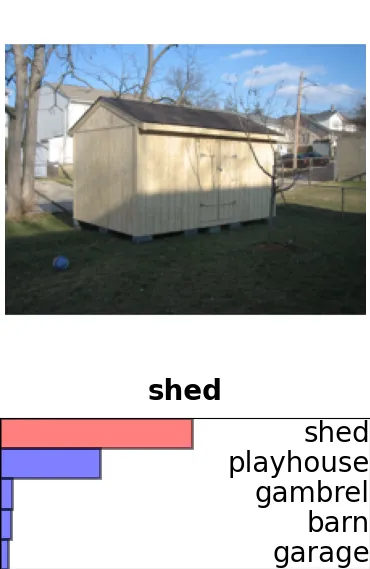

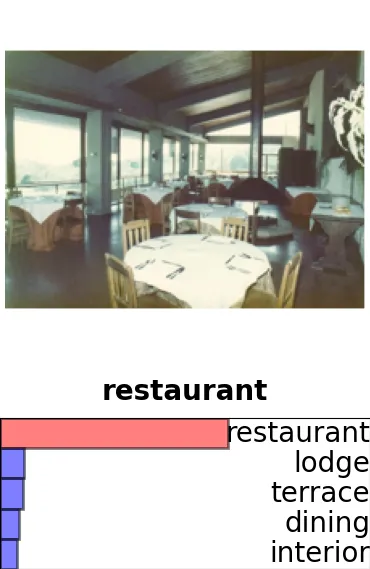

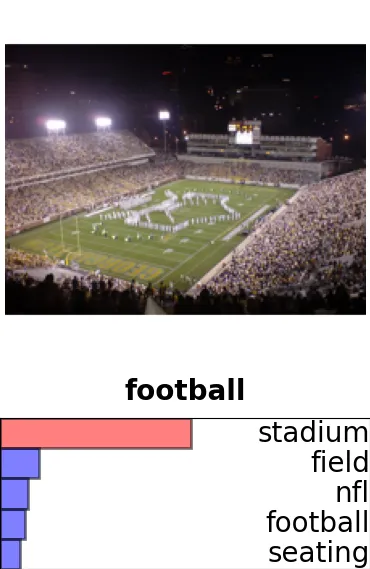

Qualitative results

We report qualitative results of our method applied to three different datasets, namely Caltech-101 (first row), Food-101 (second row), and SUN397 (last row). The first is coarse-grained, while the last two are fine-grained, focusing on food plates and places respectively. For each dataset, we present a batch of five images, where the first three represent success cases and the last two show interesting failure cases. Each sample shows the image given as input to our method together with the top-5 candidate classes. Note that CaSED generates an average of 35 candidate names per image, but we show only the five with the highest scores, as computed in Eq. 6 in the main manuscript.